Part 5 of Day 02 Setting up a Kubernetes Cluster (Master+Worker Node) using kubeadm on AWS EC2 Instances (Ubuntu 22.04)

Welcome to Part 5 of Day 02 of the #5DaysOfKubernetes challenge! Today, we'll set up a Kubernetes cluster with one master node and two worker nodes using kubeadm. Let's embark on this exciting journey of creating a Kubernetes environment!

Objectives

By the end of this tutorial, you will:

Create three EC2 instances with Ubuntu 22.04 and the necessary security group settings.

Configure the instances to prepare for Kubernetes installation.

Install Docker and Kubernetes components on all nodes.

Initialize the Kubernetes cluster on the master node.

Join the worker nodes to the cluster.

Deploy an Nginx application on the cluster for validation.

Step 1:

Create three EC2 Instances with the given configuration

Instance type: t2.medium

Ubuntu version: 22.04

Create a key pair so you can connect to the instances over SSH.

Create a new security group. Once the instances are up, add an inbound rule that allows all traffic to the attached security group.

Name the instances as you like. In this setup, I am using one master node and two worker nodes.

After creating the instances, we have to configure all of them. Follow the steps below carefully.

Commands to run on all nodes (master and workers)

Once you have logged in to all three instances, run the following commands on each of them.

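Step 2:

Switch to root and disable swap. By default the kubelet refuses to start while swap is enabled, and removing the swap entry from /etc/fstab keeps it off after a reboot.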

sudo su

swapoff -a; sed -i '/swap/d' /etc/fstab
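If you want to confirm that swap is really off before moving on, a small check like the one below can help. This is a minimal sketch: `swap_is_off` is a hypothetical helper name, and it relies on /proc/swaps containing only its header line when no swap area is active.

```shell
# Hypothetical helper: succeeds when no swap area is active.
# /proc/swaps always starts with a header line; every further
# non-empty line describes an active swap device or file.
swap_is_off() (
  swaps_file="${1:-/proc/swaps}"   # path can be overridden for testing
  active=$(tail -n +2 "$swaps_file" | grep -c . || true)
  [ "$active" -eq 0 ]
)

# Usage on a node:
#   swap_is_off && echo "swap is disabled" || echo "swap is still active"
```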

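Step 3:

Load the overlay and br_netfilter kernel modules and set the sysctl parameters that Kubernetes networking relies on.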

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# sysctl params required by setup, params persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply sysctl params without reboot

sudo sysctl --system
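To double-check that the three parameters from Step 3 took effect, you could read them back from /proc/sys. The sketch below assumes a hypothetical helper named `sysctl_matches`; it only reads files and changes nothing on the node.

```shell
# Hypothetical helper: compares a sysctl key with an expected value by
# reading the matching file under /proc/sys (dots in the key become slashes).
sysctl_matches() (
  key="$1"
  expected="$2"
  root="${3:-/proc/sys}"            # root can be overridden for testing
  path="$root/$(printf '%s' "$key" | tr . /)"
  [ -r "$path" ] && [ "$(cat "$path")" = "$expected" ]
)

# Usage on a node:
#   for key in net.bridge.bridge-nf-call-iptables \
#              net.bridge.bridge-nf-call-ip6tables \
#              net.ipv4.ip_forward; do
#     sysctl_matches "$key" 1 || echo "$key is not set to 1"
#   done
```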

Step 4:

apt update

Step 5:

Install dependencies by running the command

sudo apt-get install -y apt-transport-https ca-certificates curl

Step 6:

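Fetch the public signing key used to verify the Kubernetes packages when they are installed. The Google-hosted repository (apt.kubernetes.io) has been deprecated and frozen, so this step and the next use the community-owned pkgs.k8s.io repository instead. v1.30 is only an example minor version; substitute the one you want to install.

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

Step 7:

Add the Kubernetes package repository to the sources.list.d directory

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list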

Step 8:

Refresh the package index, since we have added a new key and repository.

apt update

Step 9:

Install kubelet, kubeadm, kubectl and kubernetes-cni

apt-get install -y kubelet kubeadm kubectl kubernetes-cni

Step 10:

Install Docker. This is one of the important dependencies for setting up the master and worker nodes, and the docker.io package also pulls in containerd, which is configured in the next step.

apt install docker.io -y

Step 11:

Configure containerd to use the systemd cgroup driver so that it stays compatible with the kubelet.

sudo mkdir -p /etc/containerd

sudo sh -c "containerd config default > /etc/containerd/config.toml"

sudo sed -i 's/ SystemdCgroup = false/ SystemdCgroup = true/' /etc/containerd/config.toml
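Since the sed command silently does nothing when the pattern is not found, it can be worth confirming that the setting really changed. Here is a minimal sketch, assuming a hypothetical helper called `systemd_cgroup_enabled` that simply greps the generated config file.

```shell
# Hypothetical helper: succeeds when the containerd config contains
# "SystemdCgroup = true" (leading whitespace is allowed).
systemd_cgroup_enabled() (
  config="${1:-/etc/containerd/config.toml}"   # default path from Step 11
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$config"
)

# Usage on a node:
#   systemd_cgroup_enabled || echo "SystemdCgroup is not enabled yet"
```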

Step 12:

Restart containerd and the kubelet, and enable the kubelet so that it starts automatically after a reboot and the node reconnects to the cluster.

systemctl restart containerd.service

systemctl restart kubelet.service

systemctl enable kubelet.service

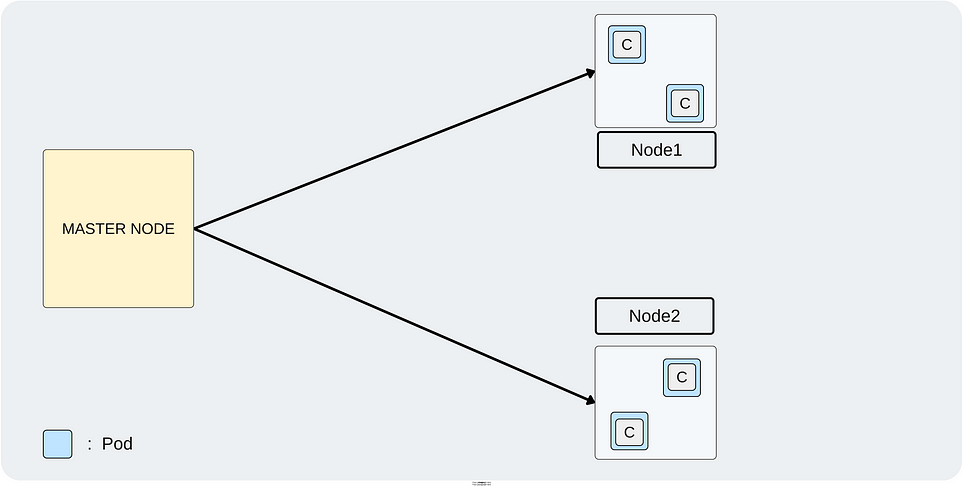

We have now installed everything that is needed on all nodes (master and workers). The next steps are run only on the master node.

Only on the Master Node

Step 13:

Pull the images the control plane needs before initializing the cluster, such as kube-apiserver, kube-controller-manager, and other important components.

kubeadm config images pull

Step 14:

Now, initialize the Kubernetes cluster. The output ends with a few commands to run on the master node (they are covered in Step 15), and at the very bottom you will find the kubeadm join command, which each worker node runs to connect to the master node. It contains the token and the CA certificate hash for your cluster.

kubeadm init

Keep the kubeadm join command in your notepad or somewhere for later.
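If copying from the terminal feels error-prone, one option is to save the init output to a file and pull the join command out of it. The sketch below assumes you ran something like `kubeadm init | tee kubeadm-init.log`; the file name and the `extract_join_command` helper are hypothetical, and the helper expects the two-line form (a trailing backslash, then the hash on the next line) that kubeadm prints.

```shell
# Hypothetical helper: prints the kubeadm join command found in a saved
# copy of the "kubeadm init" output as a single line.
extract_join_command() (
  log="${1:-kubeadm-init.log}"
  # Take the lines from "kubeadm join" through the hash, drop the trailing
  # backslash, then collapse tabs, newlines and repeated spaces.
  sed -n '/^[[:space:]]*kubeadm join/,/discovery-token-ca-cert-hash/p' "$log" \
    | sed 's/\\$//' \
    | tr '\t\n' '  ' \
    | tr -s ' ' \
    | sed 's/^ //; s/ $//'
)

# Usage on the master node:
#   extract_join_command kubeadm-init.log
# If the output is lost, kubeadm can print a fresh join command:
#   kubeadm token create --print-join-command
```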

Step 15:

To manage the cluster with kubectl, create the .kube directory, copy the admin kubeconfig file into it, and change the ownership of the file to your user.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Step 16:

Verify the kubeconfig by listing all the pods in the kube-system namespace. You will notice two pods (typically the CoreDNS pods) that are not yet in Ready status because the network plugin is not installed.

kubectl get po -n kube-system

Step 17:

Verify the health status of all the cluster components

kubectl get --raw='/readyz?verbose'
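The verbose output lists one check per line, prefixed with [+] when it passes and [-] when it fails. As a rough sketch, the hypothetical `failed_checks` filter below prints only the names of failing checks. The usage line assumes the API server listens on localhost:6443 and allows anonymous access to the health endpoints, which is the usual kubeadm default.

```shell
# Hypothetical filter: reads verbose readyz output on stdin and prints
# the name of every check that is marked as failed ("[-]name failed: ...").
failed_checks() {
  { grep '^\[-\]' || true; } | sed 's/^\[-\]//; s/ .*$//'
}

# Usage on the master node (no output means every check passed):
#   curl -ks 'https://localhost:6443/readyz?verbose' | failed_checks
```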

Step 18:

Check the cluster-info

kubectl cluster-info

Step 19:

Install the Calico network plugin by running the command below on the master node

kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/calico.yaml

Step 20:

Now, if you run the command below, you will see that the two remaining pods are in Ready status, which means the worker nodes can now join the cluster.

kubectl get po -n kube-system
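The Calico pods can take a minute or two to start, so instead of eyeballing the list you could filter it. This is a minimal sketch with a hypothetical `not_ready_pods` filter; it assumes the default column layout of `kubectl get po` (NAME, READY, STATUS, ...).

```shell
# Hypothetical filter: reads "kubectl get po" output on stdin and prints
# the name of every pod whose READY column (for example 0/1) is not full
# or whose STATUS is not Running.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")
    if (ready[1] != ready[2] || $3 != "Running") print $1
  }'
}

# Usage on the master node (no output means every pod is ready):
#   kubectl get po -n kube-system | not_ready_pods
# kubectl can also block until the pods are ready:
#   kubectl wait --for=condition=Ready pods --all -n kube-system --timeout=300s
```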

Step 21:

Now, run the join command on Worker Node1. This is the command I asked you to save in Step 14. Use your own command here, because your token and hash will be different from mine.

Worker Node1

kubeadm join 10.0.0.15:6443 --token 6c8w3o.5r89f9cfbhiunrep --discovery-token-ca-cert-hash sha256:eec45092dc7341079fc9f2a3399ad6089ed9e86d4eec950ac541363dbc87e6aa

Step 22:

Repeat Step 21 on Worker Node2.

Worker Node2

kubeadm join 10.0.0.15:6443 --token 6c8w3o.5r89f9cfbhiunrep --discovery-token-ca-cert-hash sha256:eec45092dc7341079fc9f2a3399ad6089ed9e86d4eec950ac541363dbc87e6aa

From here on, all the commands are run on the master node only.

Run the command below to confirm that the joins succeeded. You will see the worker nodes listed with their respective private IPs, and they should be in Ready status.

kubectl get nodes
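A freshly joined node may show NotReady for a short while. As a small sketch, the hypothetical `ready_node_count` filter below counts the nodes whose STATUS column is exactly Ready, so you can compare the result with the three nodes in this setup.

```shell
# Hypothetical filter: reads "kubectl get nodes" output on stdin and prints
# how many nodes have a STATUS of exactly "Ready". Nodes shown as NotReady
# or Ready,SchedulingDisabled are not counted.
ready_node_count() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on the master node:
#   [ "$(kubectl get nodes | ready_node_count)" -eq 3 ] && echo "all three nodes are Ready"
```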

That completes the setup of the master and worker nodes. Now let's deploy a simple nginx application with two replicas, which the scheduler will normally spread across the two worker nodes.

Step 23:

Run the command below, which applies a Deployment manifest that runs two nginx replicas on the worker nodes.

cat <<EOF | kubectl apply -f -

apiVersion: apps/v1

kind: Deployment

metadata:

  name: nginx-deployment

spec:

  selector:

    matchLabels:

      app: nginx

  replicas: 2

  template:

    metadata:

      labels:

        app: nginx

    spec:

      containers:

      - name: nginx

        image: nginx:latest

        ports:

        - containerPort: 80

EOF

Step 24:

Expose the deployment through a NodePort Service on port 32000, so you can reach the nginx application from your browser on that port.

cat <<EOF | kubectl apply -f -

apiVersion: v1

kind: Service

metadata:

  name: nginx-service

spec:

  selector:

    app: nginx

  type: NodePort

  ports:

  - port: 80

    targetPort: 80

    nodePort: 32000

EOF

Step 25:

Now, check the pods with the command below. You should see that your pods are in Running status.

kubectl get pods

Step 26:

On Worker Node1

You can validate your deployment by opening the public IP of WorkerNode1 in your browser, followed by a colon (:) and the port (32000).

On Worker Node2

You can validate your deployment by opening the public IP of WorkerNode2 in your browser, followed by a colon (:) and the port (32000).
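The same check can be done from a terminal. The sketch below uses a hypothetical `nginx_reachable` helper that fetches the page with curl and looks for the "Welcome to nginx" text from the default nginx page. The IP address in the usage line is a placeholder, not a value from this setup.

```shell
# Hypothetical helper: succeeds when the default nginx welcome page is
# served on the given host and NodePort (32000 unless another port is given).
nginx_reachable() (
  host="$1"
  port="${2:-32000}"
  curl -fsS --max-time 5 "http://${host}:${port}/" | grep -q 'Welcome to nginx'
)

# Usage from your own machine (replace the placeholder with a worker's public IP):
#   nginx_reachable 203.0.113.10 && echo "nginx is reachable on port 32000"
```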

Conclusion:

Today, you’ve successfully set up a Kubernetes cluster with a master and two worker nodes. This foundational step is crucial for your Kubernetes journey.